No matter how well your website or blog is performing, it is always a good idea to audit it periodically. Here I am going to describe one of the popular SEO tools, which you can use free of charge.

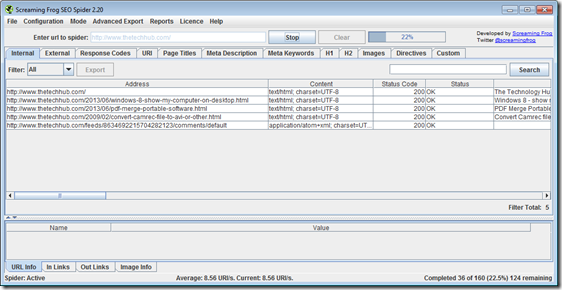

Express audits can be carried out using Screaming Frog SEO Spider. Its free version can be downloaded from the Screaming Frog website. As is common with free versions, it has limited functionality and a limited number of scanned pages (500), but in most cases this is good enough.

How do you get started with Screaming Frog SEO Spider?

The program is very simple to use: just run it, enter the address of the website you want to audit, and press the Start button. A progress indicator shows how many pages have been scanned so far. The software collects the addresses of all the pages on the site and displays a lot of information about each of them, including images and any other files hosted on your site. To hide the extra information, simply filter the addresses by type (for example, show only HTML pages).
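To make "collecting the addresses of all pages" more concrete, here is a minimal sketch of how a spider of this kind can discover pages. It walks internal links breadth-first and stops at a page limit. The limit defaults to 500 here, mirroring the free version's cap. This is not Screaming Frog's actual implementation. `crawl`, `LinkExtractor` and the injected `fetch` function are hypothetical names. `fetch` is assumed to return a `(status_code, html)` pair, which lets the example run without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href values of <a> tags in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=500):
    """Breadth-first crawl of internal links, stopping after max_pages pages.

    `fetch` is a hypothetical caller-supplied function: url -> (status, html).
    Returns a dict mapping each crawled URL to its status code.
    """
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    results = {}
    while queue and len(results) < max_pages:
        url = queue.popleft()
        status, html = fetch(url)
        results[url] = status
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop "#fragment" parts.
            absolute, _ = urldefrag(urljoin(url, href))
            # Follow only links on the same host (internal links).
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return results


# A tiny fake site standing in for real HTTP requests.
site = {
    "https://example.com/": (200, '<a href="/about">About</a> <a href="https://other.org/">Out</a>'),
    "https://example.com/about": (200, '<a href="/">Home</a> <a href="/missing#top">Broken</a>'),
}


def fake_fetch(url):
    return site.get(url, (404, ""))


pages = crawl("https://example.com/", fake_fetch)
```

External links, such as `https://other.org/` in the fake site, are recorded by a real tool but not followed. That is why the sketch compares hosts before queuing a URL.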

Finding Useful Information

The information is grouped into different tabs. Here is what you will find on the screen:

- Internal (internal links): the first tab, with basic information about the site's pages. It is divided into columns, each of which can be sorted alphabetically (or, for numbers, from high to low and vice versa) with one click on the column name.

- External (external links): a list of outside resources that your site links to.

- Response Codes: shows the HTTP status code returned by each page. I usually sort them in descending order (by clicking the column name) so that 5XX codes come first, followed by 4XX, 3XX, and finally 200. The first three types deserve particular attention. For further analysis and reporting, the data can be exported to Excel (CSV format), either tab by tab or all together (every tab has an export function).

- URI (problematic URLs): lists problematic site addresses:

  - URLs with non-ASCII characters.

  - URLs with underscores. This is not, of course, a violation of search engine rules, but hyphens ("-") are still preferable in addresses, because hyphens separate words and underscores do not.

  - Duplicate pages. In principle everything is clear here: duplicates should be eliminated!

  - Dynamic addresses (with query parameters). It is better not to use them on your site because, first, they are not user-friendly and, second, they can create duplicate content.

  - URLs longer than 115 characters. The shorter the address, the better and the easier it is to read, so do not exceed 115 characters; very long addresses simply look bad.

- Page Titles: a very useful tab listing page titles and information about them. Here you can find:

  - Pages with no title. If these pages matter for promotion, adding a title is mandatory.

  - Pages with duplicate titles. Rule: titles should be unique. By the way, duplicate pages very often share the same title, so the list of pages with identical titles is one of the keys to finding duplicates on the site.

  - Titles longer than 70 characters. Until recently, the usual maximum title length (for Google) was 60-70 characters, but it seems that titles are now measured in pixels rather than characters.

  - Page titles identical to H1 headings. This should not happen, because each of these elements plays its own role in optimizing the page for specific queries. Of course, the H1 and the title share a single theme, but they should approach it from different angles. Unfortunately, some CMSs automatically copy the title from the H1 heading; that is a question for the developers.

- Meta Description: information about the description meta tag, including its length, plus lists of pages with duplicate or missing meta descriptions.

- Meta Keywords: what can I say, it is better not to fill this tag in at all. This tab can be skipped.

- H1: the H1 headings. Ideally, of course, each page should have exactly one such heading (though this is not always possible, depending on how the CMS is configured).

- H2: the H2 headings on each page.

- Images: the site's images and their file sizes. This is useful for analysis and for potentially speeding up page loading by optimizing images.

- Meta & Canonical: this tab is very important because it shows each page's meta robots and rel="canonical" values.
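Several of the URI and Page Titles filters listed above come down to simple rules. The sketch below reimplements a few of them in Python. It only illustrates the rules described in this post and does not show how Screaming Frog computes them. The page data is assumed to be plain dictionaries with hypothetical `url`, `title` and `h1` keys. `url_issues` and `title_issues` are hypothetical helper names. Comparing titles case-insensitively is a choice made for this sketch.

```python
from collections import Counter

MAX_URL_LENGTH = 115   # URI tab threshold mentioned above
MAX_TITLE_LENGTH = 70  # character-based rule of thumb from the Page Titles tab


def url_issues(url):
    """Return the URI-tab style problems found in a single URL."""
    issues = []
    if not url.isascii():
        issues.append("non-ascii")
    if "_" in url:
        issues.append("underscore")
    if "?" in url:  # a query string marks a dynamic address
        issues.append("dynamic")
    if len(url) > MAX_URL_LENGTH:
        issues.append("too-long")
    return issues


def title_issues(pages):
    """Map each problematic page URL to its title problems.

    `pages` is a list of dicts with hypothetical keys 'url', 'title', 'h1'.
    """
    # Count non-empty titles, ignoring case and surrounding whitespace.
    counts = Counter(p["title"].strip().lower() for p in pages if p["title"].strip())
    report = {}
    for p in pages:
        title = p["title"].strip()
        issues = []
        if not title:
            issues.append("missing")
        else:
            if counts[title.lower()] > 1:
                issues.append("duplicate")
            if len(title) > MAX_TITLE_LENGTH:
                issues.append("too-long")
            if title.lower() == p["h1"].strip().lower():
                issues.append("same-as-h1")
        if issues:
            report[p["url"]] = issues
    return report


sample_pages = [
    {"url": "/", "title": "Home", "h1": "Welcome"},
    {"url": "/a", "title": "Shoes", "h1": "Shoes"},
    {"url": "/b", "title": "Shoes", "h1": "Running shoes"},
    {"url": "/c", "title": "", "h1": "Contact"},
]
report = title_issues(sample_pages)
```

Pixel-based title truncation cannot be checked this way, because it depends on font metrics. The 70-character limit is therefore only a rough proxy.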

After reviewing all the data in these tabs, we can save it to an Excel file and use that information to identify the site's weak spots and fix them. Screaming Frog collects all the basic information about a site, lets you analyze its structure, metadata, and titles, and even finds duplicate content. It is easy enough to learn, and after that a site analysis takes no more than 15-20 minutes.
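The descending status-code sort described for the Response Codes tab can also be reproduced on an exported CSV file. This minimal sketch assumes the export has `Address` and `Status Code` columns. Check the header row of your own file and adjust the names if they differ. `problem_pages` is a hypothetical helper and is not part of Screaming Frog.

```python
import csv
import io


def problem_pages(csv_text):
    """Return (status, address) pairs for non-2XX pages, highest codes first.

    Assumes 'Address' and 'Status Code' columns; an empty status counts as 0.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    pairs = [(int(row["Status Code"] or 0), row["Address"]) for row in reader]
    # Descending order puts 5XX first, then 4XX and 3XX, like the tab sort.
    pairs.sort(key=lambda pair: pair[0], reverse=True)
    return [(code, url) for code, url in pairs if not 200 <= code < 300]


export = """Address,Status Code
https://example.com/,200
https://example.com/old-page,301
https://example.com/gone,404
https://example.com/broken,500
"""

for code, url in problem_pages(export):
    print(code, url)
```

For a real export, read the file with `open(path, newline="", encoding="utf-8")` and pass its contents to the function. Python's sort is stable, so pages that share a status code keep their order from the file.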
